Over the past ten years, artificial intelligence (AI) has advanced rapidly, changing how we create, communicate, and even make decisions. Large AI systems have received most of the attention, but small language models are quietly making a significant impact too. Although these systems are much smaller than models like GPT-4 or Gemini, they are surprisingly powerful for their size. They can run without massive servers or enormous amounts of data, and they are faster and simpler to operate.

This essay examines what makes small language models distinctive, why they are growing in popularity, and how they may help make AI more widely accessible.

Understanding Small Language Models

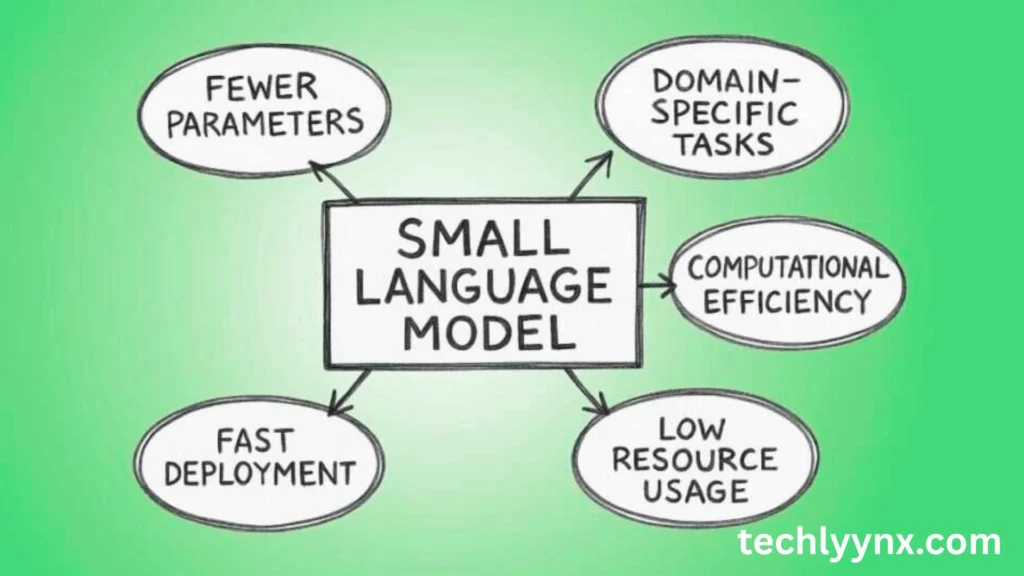

In the field of artificial intelligence, small language models are like compact cars: they lack the power of larger models, but they can still get you where you want to go. Put simply, a small language model is an AI system trained to understand and generate human-like text, but designed to be lightweight. This means it can run on smaller devices and needs less storage space and fewer computing resources.

For instance, larger AI models may need data centers and expensive graphics processors, whereas small models can run on a laptop, a smartphone, or even an embedded system inside a robot. They may not handle vast or complex jobs as well as large models, but they are well suited to many everyday tasks.
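To make the storage difference concrete, here is a rough back-of-the-envelope sketch. It assumes that the memory needed for a model's weights is roughly the number of parameters times the bytes used per parameter, and it ignores runtime extras such as activations and caches. The parameter counts and bit widths below are illustrative round numbers, not figures for any specific product; storing weights at lower precision (quantization) is an assumption added here to show its effect.

```python
def weight_memory_gb(num_parameters: float, bits_per_parameter: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 10**9 bytes).

    Ignores activations, caches, and runtime overhead, so real usage is higher.
    """
    bytes_total = num_parameters * bits_per_parameter / 8
    return bytes_total / 1e9


# Illustrative, hypothetical model sizes and precisions.
examples = [
    ("1B params, 16-bit", 1e9, 16),
    ("1B params, 4-bit", 1e9, 4),
    ("70B params, 16-bit", 70e9, 16),
]

for label, params, bits in examples:
    print(f"{label}: ~{weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, a one-billion-parameter model needs only a few gigabytes or less for its weights, which fits in the memory of many phones and laptops, while a model seventy times larger at the same precision needs far more memory than typical consumer devices provide.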

Benefits of Small Language Models

Small language models are attractive for reasons beyond their size. Their many practical advantages appeal to companies, developers, and everyday users. First, they are faster: with fewer parameters, they need less computation to produce each response, so they can reply quickly, often in real time. Second, they are cheaper to run. They can operate on inexpensive machines, whereas large models can be costly because of their heavy hardware requirements and energy consumption.

Privacy is another important advantage. Small language models make it easier to run AI locally instead of sending data to the cloud. This can be extremely important in sectors where sensitive data must be protected, such as healthcare or banking. Also, because they consume less energy, they are more environmentally friendly than their larger counterparts.

How They Are Used in Everyday Life

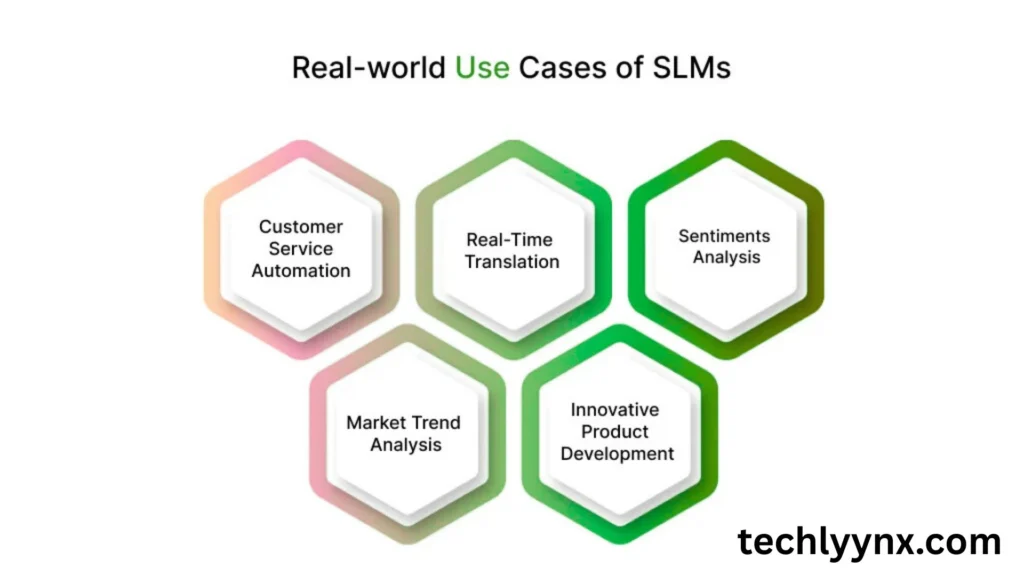

Small language models are already being used in ways you might not even notice. Here are some examples:

- Smartphone Assistance – Many phones now have built-in AI assistants that can work offline, thanks to small language models. They help with texting, reminders, and quick translations.

- Customer Service Bots – Businesses use small language models for chatbots that handle basic customer queries without needing a giant AI in the background.

- Smart Home Devices – Voice assistants in smart speakers often rely on small language models for quick responses to commands like “Turn on the lights” or “Play music.”

- Education Tools – Apps that help students learn new languages or solve math problems often use lightweight AI models to keep things fast and efficient.

- Accessibility Tools – Tools for people with disabilities, like text-to-speech converters or reading assistants, benefit from small language models that can run on personal devices.

Why They Matter for the Future

The emergence of small language models marks a significant shift in the evolution of AI. They matter for the future for several reasons:

- Accessibility: They let businesses and individuals who cannot afford expensive hardware use AI technology.

- Scalability: Their lightweight design makes them easier to build into a wide range of devices and applications.

- Sustainability: Because they consume less electricity, they are a more environmentally friendly option for AI development.

- Freedom to Innovate: Small language models let smaller businesses and independent developers experiment with AI without significant expense.

- Edge AI Growth: As more devices process data locally rather than in the cloud, small language models are likely to become the preferred option.

Challenges and Limitations

While small language models offer many advantages, they also have limitations. The biggest is capacity: they can't store as much knowledge as large models, so they may not handle very complicated or unusual questions as effectively. Another challenge is accuracy: in some cases, they may produce less precise results than larger AI systems trained on massive datasets.

There's also the issue of training data. To stay lightweight, small language models are often trained on a more focused dataset, which can make them excellent at specific tasks but less flexible overall. However, researchers are actively working on techniques such as model distillation (training a small model to imitate a larger one) and parameter optimization to narrow this gap, so the outlook is promising.
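Model distillation commonly trains a small "student" model to match both the true labels and the output probabilities of a larger "teacher" model. The sketch below computes one widely used form of that training loss for a single example: a weighted mix of ordinary cross-entropy and a temperature-softened KL-divergence term scaled by the temperature squared. The logits, the temperature of 2.0, and the weighting `alpha` are illustrative assumptions; real training would compute this with a deep-learning framework over batches, not plain Python lists.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    # Divide by the temperature, then subtract the max for numerical stability.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    # KL(p || q); terms where p_i == 0 contribute nothing.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      true_label: int, temperature: float = 2.0,
                      alpha: float = 0.5) -> float:
    # Hard-label term: cross-entropy of the student against the ground truth.
    hard_loss = -math.log(softmax(student_logits)[true_label])
    # Soft-label term: match the teacher's softened distribution.
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    return alpha * hard_loss + (1 - alpha) * soft_loss


# Hypothetical logits for a 3-class example whose correct class is 0.
print(distillation_loss([2.0, 1.0, 0.1], [3.0, 1.0, 0.2], true_label=0))
```

A higher temperature flattens the teacher's probabilities, so the student also learns how the teacher ranks the wrong answers, not just which answer is right.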

The Road Ahead for Small Language Models

The next several years could be revolutionary, and the story of small language models is only beginning. In the early days of artificial intelligence, the main goal was to build the biggest, most powerful systems. As time has shown, however, bigger isn't always better. Small language models could take the place of large-scale AI in many applications, much as smartphones took over many routine tasks once done on larger computers.

Building small language models into everyday gadgets is among the most exciting developments. Imagine a washing machine that learns your preferences and recommends washing settings without needing an internet connection. Or consider wearable technology, such as smart glasses, that could offer similar assistance directly on the device.

Another key trend is personalization. Large models are trained on huge datasets, which means they often give generic answers. Small language models, on the other hand, can be fine-tuned for specific users or industries. A small AI trained for a medical clinic, for example, could provide more relevant advice than a massive, general-purpose AI. The same goes for education, law, or creative industries.

Collaboration Between Small and Large Models

We’re also seeing collaboration between small and large models. In some systems, a small language model handles quick and simple requests, while a large model is used only for the most complex cases. This hybrid approach saves resources and makes AI more efficient.
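The hybrid setup described above can be sketched as a simple router. This is a minimal illustration, assuming each model returns an answer together with a confidence score between 0 and 1; the `HybridRouter` class, the toy models, and the 0.7 threshold are hypothetical stand-ins, not any real library's API. Real systems might instead route on query length, a separate classifier, or a cost budget.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical model interface: takes a prompt, returns (answer, confidence in [0, 1]).
ModelFn = Callable[[str], Tuple[str, float]]


@dataclass
class HybridRouter:
    small_model: ModelFn
    large_model: ModelFn
    confidence_threshold: float = 0.7  # assumed cut-off; tune per application

    def answer(self, prompt: str) -> Tuple[str, str]:
        """Return (answer, name of the model that produced it)."""
        # Try the cheap, local small model first.
        text, confidence = self.small_model(prompt)
        if confidence >= self.confidence_threshold:
            return text, "small"
        # Escalate to the large model only when the small one is unsure.
        text, _ = self.large_model(prompt)
        return text, "large"


# Toy stand-ins for real models, for illustration only.
def toy_small_model(prompt: str) -> Tuple[str, float]:
    known = {"turn on the lights": "Turning on the lights."}
    key = prompt.strip().lower()
    if key in known:
        return known[key], 0.95
    return "I'm not sure.", 0.2


def toy_large_model(prompt: str) -> Tuple[str, float]:
    return f"[detailed answer to: {prompt}]", 0.9


router = HybridRouter(toy_small_model, toy_large_model)
print(router.answer("Turn on the lights"))
print(router.answer("Summarize this contract"))
```

Because most everyday requests stop at the small model, the large model is called only for the harder cases, which is where the resource savings come from.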

From a business perspective, these models lower the barrier to entry for AI innovation. In the past, launching an AI-powered product often required millions of dollars in infrastructure. Now, with lightweight models, even a small startup can build an AI app and run it on everyday hardware.

However, the path forward isn't without challenges. We'll need to balance performance with privacy and ensure that these models remain transparent and fair. Bias in training data is a concern regardless of model size, and developers must take steps to address it.

Conclusion

Ultimately, the rise of small language models is not about replacing large AI but about creating a balanced ecosystem in which models of many sizes coexist, each handling the tasks it is best suited for. In the future, AI is likely to become faster, cheaper, and more personalized, touching every aspect of our lives without always needing a connection to a massive cloud server.